We’ve been in stealth mode for the last 18 months, working tirelessly on our new AEON motion scanning system: something that has taken us over 5 years to develop from inception to reality.


Today’s inspiration: [SoundCloud track](https://api.soundcloud.com/tracks/243092703)


The word aeon /ˈiːɒn/, also spelled eon (in American English) and æon, originally meant “life”, “vital force” or “being”, “generation” or “a period of time”, though it tended to be translated as “age” in the sense of “ages”, “forever”, “timeless” or “for eternity”.

Carrying on from our motion scanning research back in 2011, we’ve been working on finalizing our “OutsideIn” motion scanning system and on understanding its capture, storage and playback obstacles.

The best-known use of videogrammetry dates back to the late ’90s, when George Borshukov, Kim Libreri and the team used it extensively on The Matrix films. It has since been used in various forms in VFX and games, most notably in the Harry Potter films, using a custom-built rig by MPC, and more recently by Digital Air on Ghost in the Shell. It’s not a new concept.

This pioneering work inspired us for many years. It has since spawned many variations: the incredible work by Oliver Bao and team at Depth Analysis (L.A. Noire), the pioneering stereo-pair approach by Colin Urquhart and team at Dimensional Imaging (DI4D), active IR pattern scanning such as 3DMD, and the phosphorescent paint approach of the now-troubled MOVA Contour. Not forgetting the incredible high-fidelity research by Disney Research Zürich.

Other techniques include green-screen silhouette reconstruction, used by the visionaries at 4DViews and Microsoft Research. 4DViews really paved the way for free-viewpoint media.

Our system is a little different from the others: it does not rely on green-screen silhouette reconstruction, active scanning, stereo pairs or low-end USB cameras. We came at this problem from the hardest possible angle: high-end, high-resolution per-pixel reconstruction using machine vision cameras and modern software tools like Agisoft Photoscan or Capturing Reality. We use cameras that can record RAW footage (at up to 12-bit) at 6K resolution, streamed to disk at up to 3.4GB/s. Our baseline capture rate is 30fps, but we can also shoot at 60fps or 120fps depending on the region of interest (ROI) used; higher frame rates reduce capture resolution.
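For the curious, the ROI trade-off can be put into rough numbers. The sketch below assumes a fixed per-camera link bandwidth and a hypothetical ~20MP, 12-bit sensor (5472 × 3648), picked only to roughly match the 20MP images mentioned later; these are not our actual camera specs. If the link is fully used at 30fps, doubling the frame rate halves the number of sensor rows you can read out.

```python
# Back-of-envelope RAW bandwidth estimate for one machine vision camera.
# Sensor figures are hypothetical placeholders, not real camera specs.

def raw_rate_bytes_per_s(width: int, height: int, bit_depth: int, fps: float) -> float:
    """Uncompressed RAW data rate: one Bayer sample per pixel, tightly packed bits."""
    return width * height * bit_depth / 8 * fps


def max_roi_rows(link_bytes_per_s: float, width: int, bit_depth: int, fps: float) -> int:
    """Tallest full-width region of interest that fits a fixed link at a given frame rate."""
    bytes_per_row = width * bit_depth / 8
    return int(link_bytes_per_s // (bytes_per_row * fps))


if __name__ == "__main__":
    W, H, BITS = 5472, 3648, 12  # hypothetical ~20MP, 12-bit sensor
    budget = raw_rate_bytes_per_s(W, H, BITS, 30)
    print(f"30fps full frame: {budget / 1e6:.0f} MB/s per camera")
    # Treat the 30fps full-frame rate as the link budget and see how the ROI shrinks.
    for fps in (30, 60, 120):
        rows = min(H, max_roi_rows(budget, W, BITS, fps))
        print(f"{fps:>3}fps -> ROI {W} x {rows}")
```

Real cameras also have readout and protocol overheads, so treat this as an upper bound rather than a prediction.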

This has been a huge technical challenge, something that has taken us over 5 years to solve since our early days in human scanning 10 years ago. It’s also been expensive to develop on our own.

...we dream of using USB Point Grey cameras at 2K resolution... but that would be far too easy!

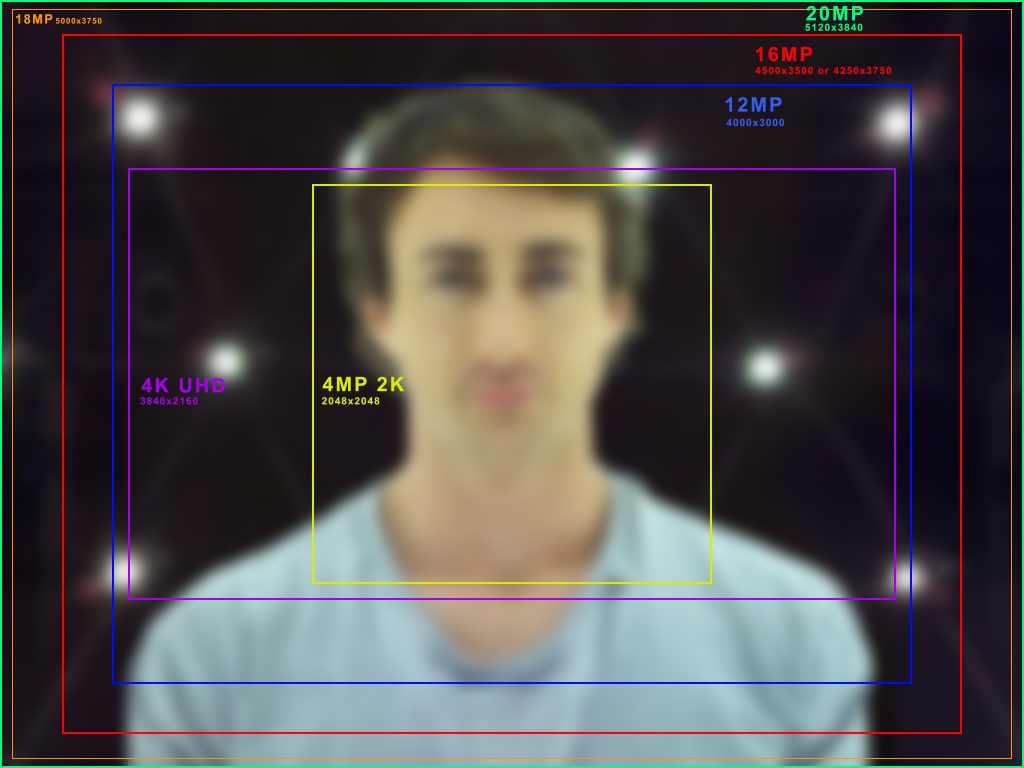

Resolution comparison: our 6K cameras versus 2K

Several terms have appeared over the last few years in relation to this field: motion scanning, volumetric capture, holographic, video scanning, videogrammetry and lightfields. All are equally relevant.

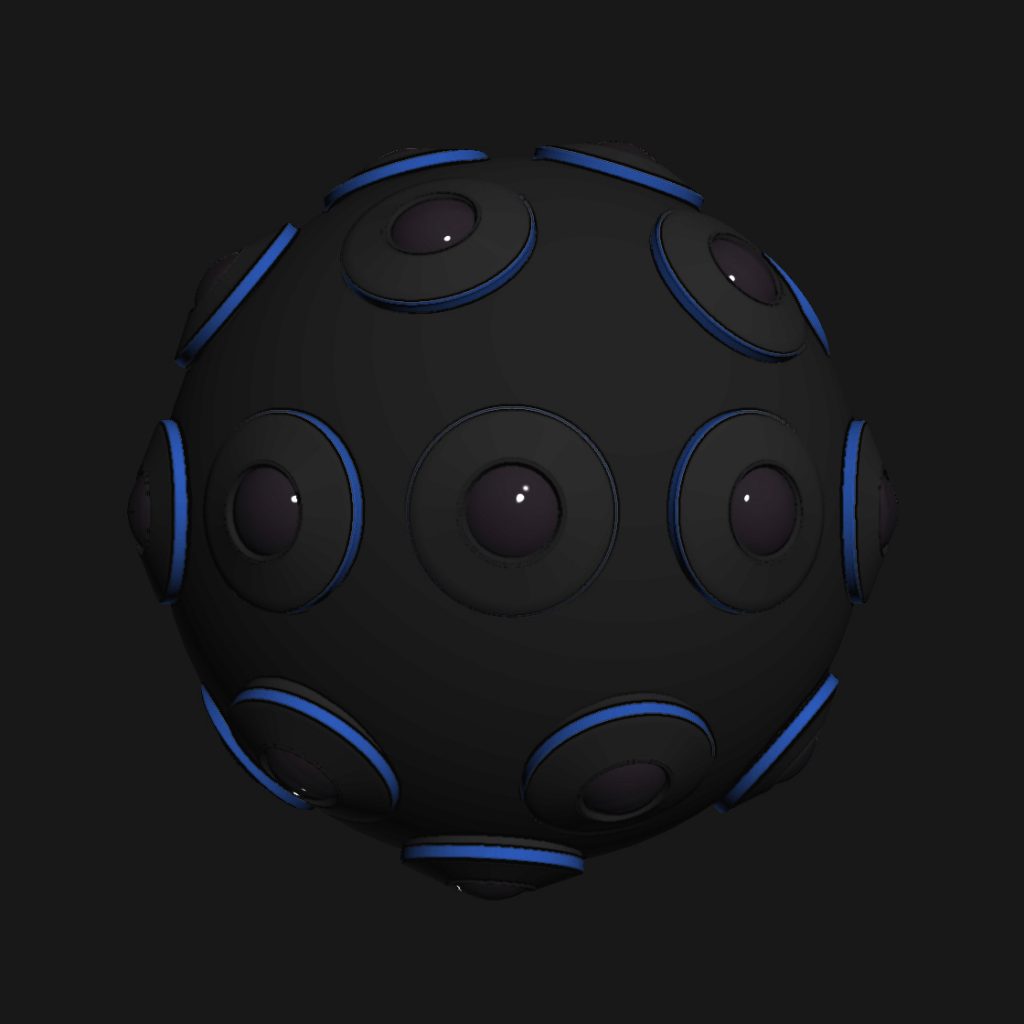

Here’s a typical example of an “InsideOut” lightfield capture system, similar to what Facebook showcased recently.

We reversed that approach and designed and built our own “OutsideIn” Motion Scanning System, part IR, part IDA-Tronic in design.

Some of our early, primitive motion scanning tests with DSLRs, dating back to 2011.

These were our first tests, using standard DSLR cameras set to HD. We could have continued with this research, but these cameras have several problems: their resolution is too low, they cannot be synchronised (we synced them by hand using the sound .wav in After Effects), they suffer from rolling-shutter artefacts, and they can only output heavily compressed movie files. We needed synced access to the RAW streamed data at a higher bit depth and higher resolution, with no rolling shutter. Only in the last 6 months has this really become possible at the higher end.
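We lined those DSLR clips up by hand in After Effects. For anyone repeating that kind of experiment, the same alignment can be automated by cross-correlating each camera’s audio against a reference track. This is a generic technique, not the tool we used; the sketch assumes mono audio already decoded into NumPy arrays at a common sample rate.

```python
import numpy as np


def audio_offset_samples(reference: np.ndarray, other: np.ndarray) -> int:
    """Lag (in samples) that best aligns `other` with `reference`.

    Positive means a shared sound (e.g. a clap) occurs `lag` samples later
    in `other` than in `reference`, i.e. `other` started recording earlier.
    """
    ref = reference - reference.mean()  # remove DC offset before correlating
    oth = other - other.mean()
    corr = np.correlate(oth, ref, mode="full")
    # In "full" mode, index i corresponds to lag i - (len(ref) - 1).
    return int(np.argmax(corr)) - (len(ref) - 1)


def offset_frames(lag_samples: int, sample_rate: int, fps: float) -> float:
    """Convert an audio lag into a (possibly fractional) video frame offset."""
    return lag_samples / sample_rate * fps
```

`np.correlate` is O(N·M), so for full-length clips an FFT-based correlation such as `scipy.signal.correlate` with `method="fft"` is much faster. Note that audio sync only gets you to the nearest frame or so; it cannot remove the per-camera rolling shutter or trigger jitter mentioned above.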

All of this costs money, which is why it has taken us so long to proceed. We had to self-fund all of this research, without VC backing. Everything had to be designed and built from the ground up: custom capture software, custom-built recording hardware, storage configurations and software, a custom cloud processing farm, network solutions... you name it.

We now rely on multiple synchronized, genlocked 6K (full-frame) global-shutter machine vision cameras running at 30fps.

We used to build static photogrammetry rigs with 180 DSLRs, which is a breeze in comparison; we’ve built a few 150+ DSLR systems in the past. I remember trying to sync 10 cameras back in 2009 for our old website, IR-Ref, and it was hard. Syncing and downloading the data reliably was very complex, and scaling up was even harder. Now imagine syncing cameras with light not once every 5 minutes but 30 times a second, per camera, and storing all of that data. We’re talking terabytes, and that’s before even taking processing into account. It’s a MAMMOTH undertaking.
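At 30 syncs a second, the practical question for every frame is whether all the cameras actually fired together. As a minimal sketch, assuming each camera reports a hardware trigger timestamp in microseconds for every frame index (a hypothetical data layout), you can flag any frame whose timestamps spread wider than a tolerance; the 100µs default is an illustrative value, not our rig’s specification.

```python
from typing import Dict, List


def out_of_sync_frames(
    timestamps_us: Dict[str, List[int]], tolerance_us: int = 100
) -> List[int]:
    """Return frame indices where the spread of camera timestamps exceeds the tolerance.

    `timestamps_us` maps a (hypothetical) camera id to its per-frame trigger
    timestamps in microseconds; all cameras are assumed to share frame indexing.
    Only frames recorded by every camera are checked.
    """
    frame_count = min(len(ts) for ts in timestamps_us.values())
    bad = []
    for i in range(frame_count):
        stamps = [ts[i] for ts in timestamps_us.values()]
        if max(stamps) - min(stamps) > tolerance_us:
            bad.append(i)
    return bad
```

A sensible tolerance is a small fraction of the exposure time, since any skew between cameras means they see slightly different poses of a moving subject.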

You need robust tools to operate such a system; you’re like a DJ spinning plates. You have to monitor tens of thousands of images being recorded at once: not 180 images per scan, but 10,000s, sometimes several 10,000s of images. Scaling up to a full-body system, it will be 100,000s of images per take.
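Part of that monitoring can be automated. A minimal sketch, assuming the capture software can report which frame indices each camera actually wrote for a take: a per-camera report of dropped frames is just a set difference. The function and camera names are hypothetical.

```python
from typing import Dict, Iterable, List


def missing_frames(
    recorded: Dict[str, Iterable[int]], expected_frames: int
) -> Dict[str, List[int]]:
    """Map each camera id to the frame indices it failed to record.

    Cameras with a complete recording are left out of the report.
    """
    expected = set(range(expected_frames))
    report = {}
    for cam, frames in recorded.items():
        gaps = sorted(expected - set(frames))
        if gaps:
            report[cam] = gaps
    return report
```

An empty report means every camera wrote every frame; anything else tells you exactly which camera and frames to re-check before the take goes off for processing.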

However, over the last 12 months we’ve solved these problems. The hard work has been done; it’s now just a case of scaling up the system.

We’re now starting to see some interesting results, even with our limited 16-camera setup. We would never build a 16-camera DSLR face capture system. The problem with machine vision is that it’s expensive to scale up. USB and GoPro cameras are not the solution (at this moment in time). Most professional commercial cameras will set you back £10,000s per camera, and syncing multi-camera arrays is difficult. Machine vision was the cheapest and easiest option.

Another big issue we faced is lighting. You need a TON of light, but you must make sure the amount of light used is not going to blind your subjects! The reason you need so much light is that we are pushing the machine vision sensors to their absolute limits. We need fast exposures, somewhere around 1 to 5ms, to limit motion blur. Any faster and the output images are too dark, and we start to see PWM (pulse-width modulation) flicker from certain light sources. These settings also introduce high levels of noise and banding, which have to be rectified. We also have to shoot stopped down (at a high f-number) because we need a deep depth of field. These are very difficult shooting conditions. In general, though, 3D reconstruction software can be very forgiving of noise and low light levels, as long as you have perfect camera synchronization.
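The exposure trade-off can be roughly quantified. The sketch below estimates motion blur in pixels from subject speed and the area each pixel covers on the subject, and estimates the worst-case frame-to-frame brightness ripple when a short exposure samples a square-wave PWM light. The speeds, pixel footprint, PWM frequency and duty cycle are hypothetical inputs, not measurements from our rig.

```python
import math


def blur_pixels(speed_mm_s: float, exposure_s: float, mm_per_pixel: float) -> float:
    """Distance the subject moves during the exposure, expressed in pixels."""
    return speed_mm_s * exposure_s / mm_per_pixel


def pwm_ripple(exposure_s: float, pwm_hz: float, duty: float) -> float:
    """Worst-case brightness variation, (max - min) / mean, for square-wave PWM light.

    The light collected depends on where the exposure window falls relative to
    the PWM cycle. It is zero when the exposure spans a whole number of periods.
    """
    period = 1.0 / pwm_hz
    full = math.floor(exposure_s / period)
    rem = max(0.0, exposure_s - full * period)  # partial period left over
    on = duty * period
    lo = full * on + max(0.0, rem - (period - on))  # window lands mostly in "off"
    hi = full * on + min(rem, on)                    # window lands mostly in "on"
    mean = duty * exposure_s
    return (hi - lo) / mean


if __name__ == "__main__":
    # Hypothetical: 1 m/s head movement, 0.1 mm of face per pixel.
    for exp_ms in (1, 2, 5):
        print(f"{exp_ms}ms: blur {blur_pixels(1000, exp_ms / 1000, 0.1):.0f}px")
    # Hypothetical 1kHz PWM dimmer at 50% duty.
    for exp_ms in (0.5, 1.5, 2.0):
        print(f"{exp_ms}ms: ripple {pwm_ripple(exp_ms / 1000, 1000, 0.5):.0%}")
```

The two functions pull in opposite directions: shorter exposures reduce blur linearly but make PWM ripple worse once the exposure covers only a few light cycles, which is why flicker-free lighting matters so much at these shutter speeds.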

None of this research would have been possible without the help, research and support of IDA-Tronic and our custom tools programmers “S.C” and “Kennux”. Esper Design also helped us a great deal in the early stages, although our system has since evolved into something very different.

Here’s an example of one of our first processed sets in Capturing Reality:

This scan set was processed by hand, frame by frame. In 2 days, this one tweet got more likes and retweets than all the likes and tweets on our Twitter page combined since we joined in 2009! That gave us some confidence that the results are starting to show merit.

Capturing Reality is fast and can produce some really sharp scan output, but it lacks timeline support. The RealityCapture team were very accommodating and supportive, giving us guidance and ideas on how to process motion scan data, but the application still lacks proper timeline support for processing and editing motion scans. I hope they will someday offer full support, as it’s a great piece of software and is becoming a standard in the photogrammetry field.
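Without timeline support, each frame of a take has to be treated as its own scan set. A minimal sketch of the bookkeeping, assuming a hypothetical capture layout of one folder per camera with numbered frames (`cam01/frame_000001.tif`), regroups the images into one folder per frame so each can be loaded as a separate photogrammetry project. The naming scheme is our assumption for illustration, not something Capturing Reality or Photoscan requires.

```python
import os
import re
import shutil
from pathlib import Path

# Hypothetical naming: <take>/<camera>/frame_<index>.<ext>
FRAME_RE = re.compile(r"frame_(\d+)\.(\w+)$")


def regroup_by_frame(take_dir: Path, out_dir: Path, link: bool = False) -> int:
    """Copy (or hard-link) camera-major images into one folder per frame.

    `out_dir` should live outside `take_dir`. Returns the number of images placed.
    """
    placed = 0
    for cam_dir in sorted(p for p in take_dir.iterdir() if p.is_dir()):
        for img in sorted(cam_dir.iterdir()):
            m = FRAME_RE.search(img.name)
            if not m:
                continue  # skip sidecar files, logs, etc.
            frame, ext = m.group(1), m.group(2)
            dest_dir = out_dir / f"frame_{frame}"
            dest_dir.mkdir(parents=True, exist_ok=True)
            dest = dest_dir / f"{cam_dir.name}.{ext}"
            if link:
                os.link(img, dest)  # hard link: avoids duplicating terabytes on one volume
            else:
                shutil.copy2(img, dest)
            placed += 1
    return placed
```

Each `frame_NNNNNN` folder then holds one image per camera, named after the camera, which keeps camera identity consistent from frame to frame.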

Agisoft Photoscan, however, has had timeline support since 2012 (following IR’s suggestions and testing). This is a massive bonus. You process the data just as you would a regular scan set; the only difference is that the scan chunk has all the sequence frames embedded, and the first frame’s alignment is reused for all subsequent frames. You can also edit any frame range you want: cut, trim, paste or merge frames together. It’s incredibly powerful.
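The idea behind that timeline support can be sketched as a data structure. Because the cameras don’t move during a take, one alignment (camera poses and calibration) is solved on the first frame and shared by every frame, so edits like trim, cut or merge only touch the list of frames. This is a hypothetical model for illustration, not Photoscan’s Python API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Pose = Tuple[float, ...]  # placeholder for a camera's calibrated pose


@dataclass
class SequenceChunk:
    alignment: Dict[str, Pose]  # solved once, on the first frame, shared by all frames
    frames: List[int] = field(default_factory=list)

    def trim(self, start: int, end: int) -> "SequenceChunk":
        """Keep only frames in [start, end]; the alignment is shared, not recomputed."""
        return SequenceChunk(self.alignment, [f for f in self.frames if start <= f <= end])

    def cut(self, start: int, end: int) -> "SequenceChunk":
        """Remove frames in [start, end]."""
        return SequenceChunk(self.alignment, [f for f in self.frames if not start <= f <= end])

    def merge(self, other: "SequenceChunk") -> "SequenceChunk":
        """Combine two frame ranges; only valid if both share one alignment."""
        if other.alignment != self.alignment:
            raise ValueError("chunks were aligned separately; re-align before merging")
        return SequenceChunk(self.alignment, sorted(set(self.frames) | set(other.frames)))
```

The payoff is that the expensive alignment step happens once per take rather than once per frame, and range edits are cheap list operations.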

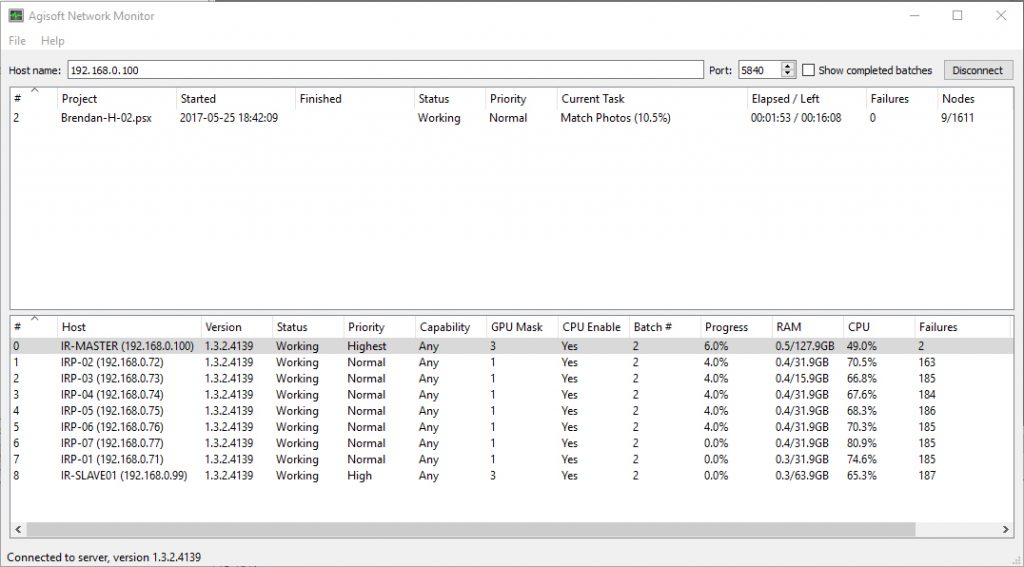

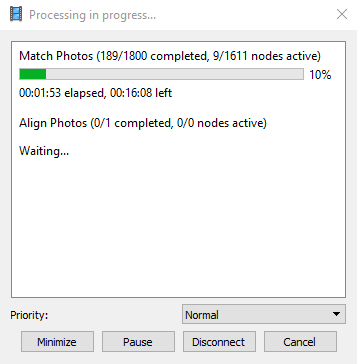

We have also recently taken advantage of network licensing and network processing in Photoscan. Previously, 1 minute of 16-camera motion scanning footage took us 5 days to process from start to finish. We can now do it in under 24 hours, split over 8 PCs. To reiterate, that’s 1,800 scans using 28,800 20MP images (20MB each), or 576GB of data... per take. Using SSDs and a 10Gbit network, we can chew through this data with no problem.
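Those figures follow directly from the rig parameters. The small calculator below makes the arithmetic explicit, estimates the raw transfer time over a 10Gbit link, and, as an illustration, splits a take’s frames into contiguous ranges for the processing nodes. The even split is our simplifying assumption; it is not how Photoscan’s network processing actually schedules work.

```python
from typing import List, Tuple


def take_stats(cameras: int, fps: int, seconds: int, mb_per_image: float) -> Tuple[int, int, float]:
    """Return (frames, images, gigabytes) for one take."""
    frames = fps * seconds           # one multi-view "scan" per frame
    images = frames * cameras        # one image per camera per frame
    gigabytes = images * mb_per_image / 1000
    return frames, images, gigabytes


def transfer_seconds(gigabytes: float, link_gbit_s: float) -> float:
    """Time to move the data at full line rate (ignores protocol and disk overhead)."""
    return gigabytes * 8 / link_gbit_s


def split_frames(frames: int, nodes: int) -> List[Tuple[int, int]]:
    """Contiguous, near-equal [start, end) frame ranges, one per node."""
    base, extra = divmod(frames, nodes)
    ranges, start = [], 0
    for n in range(nodes):
        end = start + base + (1 if n < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges
```

`take_stats(16, 30, 60, 20)` returns `(1800, 28800, 576.0)`, matching the figures above, and `transfer_seconds(576, 10)` comes to about 461 seconds, so at line rate a whole take crosses a 10Gbit link in under 8 minutes.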

As we use machine vision cameras, the data we capture is RAW, in DNG format, and has to be post-processed before it can be input to Photoscan. The images need to be debayered, colour corrected, exposure corrected, denoised and sharpened, and have a camera profile applied, etc. We first tested a pipeline using Adobe Camera Raw, but it was buggy. After Effects was too slow. We settled on Lightroom for a while, which was slow but reliable: processing 28,000 images took over 10 hours, and the UI was cumbersome for sequence data. We nearly got around to Nuke, but something else came along…
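The first steps of that chain are simple enough to sketch. The example below does a half-resolution “superpixel” debayer of an RGGB mosaic, then applies white-balance gains, an exposure adjustment in stops and a basic display gamma. It assumes the 12-bit mosaic has already been read into a NumPy array; the RGGB layout, gain values and gamma are assumptions, and this is not what Lightroom or FastVideo do internally (both use full-resolution demosaicing and far more careful colour science).

```python
import numpy as np


def superpixel_debayer(raw: np.ndarray) -> np.ndarray:
    """Half-resolution RGB from an RGGB Bayer mosaic: each 2x2 block becomes one pixel."""
    raw = raw[: raw.shape[0] // 2 * 2, : raw.shape[1] // 2 * 2]  # drop odd edge rows/cols
    r = raw[0::2, 0::2].astype(np.float32)
    g = (raw[0::2, 1::2].astype(np.float32) + raw[1::2, 0::2]) / 2  # average both greens
    b = raw[1::2, 1::2].astype(np.float32)
    return np.stack([r, g, b], axis=-1)


def develop(raw: np.ndarray, white_level: int = 4095,
            wb_gains: tuple = (2.0, 1.0, 1.5), exposure_stops: float = 0.0,
            gamma: float = 2.2) -> np.ndarray:
    """Debayer, white-balance, expose and gamma-encode a 12-bit mosaic to 8-bit RGB."""
    rgb = superpixel_debayer(raw) / white_level           # normalise 12-bit data to 0..1
    rgb *= np.asarray(wb_gains, dtype=np.float32)         # per-channel white balance
    rgb *= 2.0 ** exposure_stops                          # exposure correction in stops
    rgb = np.clip(rgb, 0.0, 1.0) ** (1.0 / gamma)         # simple display gamma
    return (rgb * 255 + 0.5).astype(np.uint8)
```

Every step here is per-pixel arithmetic with no dependence between frames, which is exactly the kind of workload that maps well onto a GPU.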

...then we found out about FastVideo. FastVideo is lightning fast: it can process sequence data on the fly, on the GPU, in real time, taking milliseconds per image rather than seconds. This means we can now process a 28,800-image sequence set in under 10 minutes instead of 10 hours. Literally a game changer. You can download and test the Fast CinemaDNG application at www.fastcinemadng.com.

**EDIT: 2019/01/05**

FastVideo now supports colour and exposure pickers, as well as EXIF and image rotation tool sets.

This is a limited prototype system to act as a proof of concept. We’ve done the ground work and the system is getting closer to becoming a commercial reality. It now just needs to be scaled.

What’s the point of all this? Digital human research: our continued efforts to understand and record humans in digital form. At the moment, the AEON Motion Scanning System has a dual purpose: high-fidelity facial analysis, and captures that are perfect to experience in VR and AR.

The AEON Motion Scanning System opens up some interesting possibilities for high-fidelity performance capture and virtual storytelling: the next evolution of human scanning.

In our next blog post we hope to share with you some sample Unity scenes to be played back on your PC or in VR.

Thanks for reading